Testing the smartcn Analyzer in Elasticsearch 5.5

You can use curl to see how the smartcn analyzer tokenizes text (for how to install the smartcn analyzer, see this blog post).

Download curl for Windows: https://curl.haxx.se/download.html

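The command below is for Linux. The Windows command prompt does not accept single-quoted arguments, and it does not accept a command split across several lines either.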

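```sh
curl -XGET 'localhost:9200/_analyze?pretty' -H 'Content-Type: application/json' -d'
{
  "analyzer" : "smartcn",
  "text" : ["学然后知不足", "教然后知困"]
}
'
```

On Windows the command has to use double quotes instead, fit on a single line, and escape every double quote inside the JSON string with `\`: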

```bat
curl -XGET "localhost:9200/_analyze?pretty" -H "Content-Type: application/json" -d"{ \"analyzer\" : \"smartcn\", \"text\" : [\"学然后知不足\", \"教然后知困\"] }"
```

Running it fails with the following error:

```json
{
  "error" : {
    "root_cause" : [
      {
        "type" : "illegal_argument_exception",
        "reason" : "Failed to parse request body"
      }
    ],
    "type" : "illegal_argument_exception",
    "reason" : "Failed to parse request body",
    "caused_by" : {
      "type" : "json_parse_exception",
      "reason" : "Invalid UTF-8 start byte 0xba\n at [Source: org.elasticsearch.transport.netty4.ByteBufStreamInput@53b7c81e; line: 1, column: 43]"
    }
  },
  "status" : 400
}
```

The command syntax itself is correct: with English values for `text`, it runs without error.

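The cause is that the Chinese characters are not sent as UTF-8. The console passes them in its current code page (on Chinese-language Windows this is usually 936, i.e. GBK), and 0xba, the byte the parser rejects, can only be a continuation byte in UTF-8, never the first byte of a character.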
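To see why English input works but Chinese does not, here is a small Python illustration (not part of the original setup). It assumes the console hands curl its arguments encoded as GBK (code page 936): ASCII characters have the same bytes in GBK and UTF-8, whereas GBK-encoded Chinese is not valid UTF-8, which is what the JSON parser complains about.

```python
def sent_bytes(text, console_encoding="gbk"):
    """Bytes that reach curl's -d argument, assuming the console encodes text with console_encoding."""
    return text.encode(console_encoding)


def parses_as_utf8(data):
    """Elasticsearch expects a UTF-8 request body; report whether these bytes are valid UTF-8."""
    try:
        data.decode("utf-8")
    except UnicodeDecodeError:
        return False
    return True


english = "learn then know"
chinese = "学然后知不足"

# ASCII characters have identical bytes in GBK and UTF-8, so English text parses.
print(sent_bytes(english) == english.encode("utf-8"))  # True
print(parses_as_utf8(sent_bytes(english)))             # True
# GBK-encoded Chinese is not valid UTF-8, matching the json_parse_exception above.
print(parses_as_utf8(sent_bytes(chinese)))             # False
# The same text encoded as UTF-8 is what Elasticsearch needs.
print(parses_as_utf8(chinese.encode("utf-8")))         # True
```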

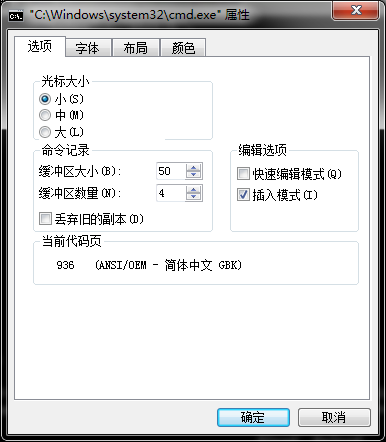

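To check the console's encoding, right-click the title bar of the command prompt window and choose Properties. Running `chcp` with no arguments also prints the active code page.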

Running `chcp 65001` switches the console to UTF-8, but the request body still failed to parse.

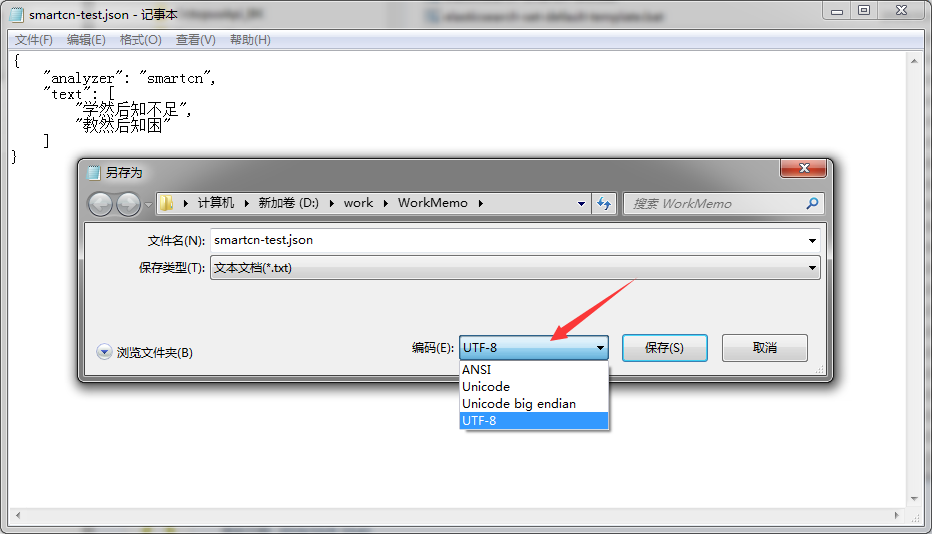
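One alternative, not tested here against Elasticsearch 5.5: since ASCII passes through the console unchanged, the Chinese text can be written as JSON `\uXXXX` escapes so the whole body becomes ASCII. The Python sketch below uses the standard `json` module to generate such a body; the helper names are hypothetical, and the cmd.exe quoting simply mirrors the double-quote-and-backslash form used above, so verify it in your own console.

```python
import json


def ascii_body(analyzer, texts):
    """Serialize the _analyze request body, writing every non-ASCII character as a \\uXXXX escape."""
    return json.dumps({"analyzer": analyzer, "text": texts}, ensure_ascii=True)


def cmd_data_arg(body):
    """Quote the body like the Windows command above: outer double quotes, inner ones escaped as \\"."""
    return '"' + body.replace('"', '\\"') + '"'


body = ascii_body("smartcn", ["学然后知不足", "教然后知困"])
# Hypothetical one-line command for cmd.exe; only ASCII characters remain in it.
print('curl -XGET "localhost:9200/_analyze?pretty" -H "Content-Type: application/json" -d' + cmd_data_arg(body))
```

Elasticsearch's JSON parser decodes the `\uXXXX` escapes back into the original characters, so the analyzer should see the same text as in the file-based approach.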

What does work is saving the JSON passed to `-d` in a file, smartcn-test.json, encoded as UTF-8 (you can confirm the encoding in Notepad via Save As -> Encoding).
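The file can also be produced programmatically instead of in Notepad. A minimal Python sketch, reusing the filename smartcn-test.json from this post; `write_request_file` is a hypothetical helper. `ensure_ascii=False` keeps the Chinese characters readable in the file, and `encoding="utf-8"` writes them as UTF-8 without a byte-order mark.

```python
import json
from pathlib import Path


def write_request_file(path, analyzer, texts):
    """Write the _analyze request body to `path` as UTF-8 JSON and return the raw bytes on disk."""
    body = {"analyzer": analyzer, "text": texts}
    Path(path).write_text(json.dumps(body, ensure_ascii=False, indent=2), encoding="utf-8")
    return Path(path).read_bytes()


raw = write_request_file("smartcn-test.json", "smartcn", ["学然后知不足", "教然后知困"])
print(raw.startswith(b"\xef\xbb\xbf"))  # False: plain UTF-8, no byte-order mark
print(raw.decode("utf-8"))
```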

Then change the command to the following and run it:

```bat
curl -XGET "localhost:9200/_analyze?pretty" -H "Content-Type: application/json" -d@smartcn-test.json > smartcn-test-result.json
```

Contents of smartcn-test.json:

```json
{
  "analyzer": "smartcn",
  "text": [
    "学然后知不足",
    "教然后知困"
  ]
}
```

After running the command, smartcn-test-result.json contains:

```json
{
  "tokens" : [
    {
      "token" : "学",
      "start_offset" : 0,
      "end_offset" : 1,
      "type" : "word",
      "position" : 0
    },
    {
      "token" : "然后",
      "start_offset" : 1,
      "end_offset" : 3,
      "type" : "word",
      "position" : 1
    },
    {
      "token" : "知",
      "start_offset" : 3,
      "end_offset" : 4,
      "type" : "word",
      "position" : 2
    },
    {
      "token" : "不足",
      "start_offset" : 4,
      "end_offset" : 6,
      "type" : "word",
      "position" : 3
    },
    {
      "token" : "教",
      "start_offset" : 7,
      "end_offset" : 8,
      "type" : "word",
      "position" : 4
    },
    {
      "token" : "然后",
      "start_offset" : 8,
      "end_offset" : 10,
      "type" : "word",
      "position" : 5
    },
    {
      "token" : "知",
      "start_offset" : 10,
      "end_offset" : 11,
      "type" : "word",
      "position" : 6
    },
    {
      "token" : "困",
      "start_offset" : 11,
      "end_offset" : 12,
      "type" : "word",
      "position" : 7
    }
  ]
}
```

For comparison, test the default analyzer as well. Opening the following link shows how the default analyzer tokenizes the same text:

http://localhost:9200/_analyze?text=学然后知不足%20教然后知困

```json
{
  "tokens": [
    {
      "token": "学",
      "start_offset": 0,
      "end_offset": 1,
      "type": "<IDEOGRAPHIC>",
      "position": 0
    },
    {
      "token": "然",
      "start_offset": 1,
      "end_offset": 2,
      "type": "<IDEOGRAPHIC>",
      "position": 1
    },
    {
      "token": "后",
      "start_offset": 2,
      "end_offset": 3,
      "type": "<IDEOGRAPHIC>",
      "position": 2
    },
    {
      "token": "知",
      "start_offset": 3,
      "end_offset": 4,
      "type": "<IDEOGRAPHIC>",
      "position": 3
    },
    {
      "token": "不",
      "start_offset": 4,
      "end_offset": 5,
      "type": "<IDEOGRAPHIC>",
      "position": 4
    },
    {
      "token": "足",
      "start_offset": 5,
      "end_offset": 6,
      "type": "<IDEOGRAPHIC>",
      "position": 5
    },
    {
      "token": "教",
      "start_offset": 7,
      "end_offset": 8,
      "type": "<IDEOGRAPHIC>",
      "position": 6
    },
    {
      "token": "然",
      "start_offset": 8,
      "end_offset": 9,
      "type": "<IDEOGRAPHIC>",
      "position": 7
    },
    {
      "token": "后",
      "start_offset": 9,
      "end_offset": 10,
      "type": "<IDEOGRAPHIC>",
      "position": 8
    },
    {
      "token": "知",
      "start_offset": 10,
      "end_offset": 11,
      "type": "<IDEOGRAPHIC>",
      "position": 9
    },
    {
      "token": "困",
      "start_offset": 11,
      "end_offset": 12,
      "type": "<IDEOGRAPHIC>",
      "position": 10
    }
  ]
}
```
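The same comparison can be scripted. Below is a Python sketch using only the standard library, assuming a node at http://localhost:9200 as in the commands above; `build_request`, `token_list`, and `analyze` are hypothetical helper names. Because the body is encoded as UTF-8 inside the script, the console code page no longer matters. Leaving out `analyzer` makes Elasticsearch fall back to its default (standard) analyzer, the one whose output is shown just above.

```python
import json
import urllib.request

ES_ANALYZE_URL = "http://localhost:9200/_analyze"  # assumption: local node on the default port


def build_request(texts, analyzer=None):
    """Build a GET _analyze request with a UTF-8 JSON body; analyzer=None means the default analyzer."""
    body = {"text": texts}
    if analyzer is not None:
        body["analyzer"] = analyzer
    data = json.dumps(body, ensure_ascii=False).encode("utf-8")
    return urllib.request.Request(
        ES_ANALYZE_URL,
        data=data,
        headers={"Content-Type": "application/json"},
        method="GET",
    )


def token_list(response_text):
    """Return just the token strings from an _analyze response."""
    return [t["token"] for t in json.loads(response_text)["tokens"]]


def analyze(texts, analyzer=None):
    """Send the request and return the tokens (requires a running Elasticsearch node)."""
    with urllib.request.urlopen(build_request(texts, analyzer)) as resp:
        return token_list(resp.read().decode("utf-8"))


# Usage against a running node:
#   analyze(["学然后知不足", "教然后知困"], "smartcn")  # smartcn
#   analyze(["学然后知不足", "教然后知困"])             # default analyzer
```

Judging by the two responses in this post, smartcn groups the text into words (学 / 然后 / 知 / 不足 / 教 / 然后 / 知 / 困), whereas the default analyzer emits one `<IDEOGRAPHIC>` token per Chinese character.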